Artificial intelligence is currently the fastest-growing field of computer science, arousing as much interest as concern. AI may have been in the news for quite some time, but its roots go back much further: the first perceptron, i.e. the simplest neural network model, was created as early as 1957!

Less than 30 years later, in 1986 to be exact, D. E. Rumelhart, G. E. Hinton and R. J. Williams described a procedure, novel at the time, for training multilayer neural networks using the backpropagation algorithm. Their model included an additional “hidden layer” of processing elements with their own connection weights, and the learning algorithm determined the direction in which these weights should be modified in a given iteration in order to reduce the error made by the network. A weight is nothing more than a numerical coefficient that characterizes how strongly a given input to the network influences the result, and a neural network is de facto a function or, in simpler terms, a sequence of matrix multiplications and additions.
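The idea of weights being adjusted to reduce the network's error can be illustrated with a minimal sketch. It is not the authors' original formulation: the sigmoid activation, the squared-error measure, the learning rate, the starting weights and all names (`forward`, `train_step`, `params`) are assumptions chosen for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(p, x):
    # Hidden layer: multiply the inputs by the weight matrix, add the bias,
    # then apply the (assumed) sigmoid activation.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    # Output neuron: weighted sum of the hidden activations plus a bias.
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y

def train_step(p, x, target, lr=0.5):
    # One backpropagation step; returns the error measured before the update.
    h, y = forward(p, x)
    error = 0.5 * (y - target) ** 2
    # Error signal at the output, then propagated back to the hidden layer.
    d_out = (y - target) * y * (1.0 - y)
    d_hid = [d_out * w * hj * (1.0 - hj) for w, hj in zip(p["W2"], h)]
    # Move every weight a small step in the direction that reduces the error.
    for j, hj in enumerate(h):
        p["W2"][j] -= lr * d_out * hj
        p["b1"][j] -= lr * d_hid[j]
        for i, xi in enumerate(x):
            p["W1"][j][i] -= lr * d_hid[j] * xi
    p["b2"] -= lr * d_out
    return error

# Hypothetical starting weights and a single made-up training example.
params = {
    "W1": [[0.5, -0.4], [0.3, 0.8]],
    "b1": [0.0, 0.0],
    "W2": [0.7, -0.6],
    "b2": 0.0,
}
x, target = [1.0, 0.0], 1.0
errors = [train_step(params, x, target) for _ in range(50)]
print(f"error before training: {errors[0]:.4f}, after 50 steps: {errors[-1]:.4f}")
```

Each call to `train_step` nudges the weights slightly, so the printed error after 50 iterations should be lower than at the start. Real networks apply the same principle to millions or billions of weights.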

The aforementioned publication has more than 14,500 citations, has been downloaded more than 100,000 times, and appeared in one of the most prestigious scientific journals, Nature. It is no surprise then that in 2018 G. E. Hinton became one of the recipients of the Turing Award, whose prestige is comparable to that of the Nobel Prize and whose winners are behind the greatest achievements in computer science. The award has been presented annually since 1966 by the Association for Computing Machinery (ACM) and is funded by Google (it is worth 1 million USD). Apart from Hinton, Y. Bengio and Y. LeCun also received the award; the three are considered the fathers of deep neural networks.

The beginnings of neural networks, however, date back even further. W. S. McCulloch and W. Pitts, in a publication entitled “A logical calculus of the ideas immanent in nervous activity”, introduced a mathematical model of a neuron with weights (on which the perceptron is based), but without the option of “learning”, i.e. self-modification of these weights. Note the date of the original publication: 1943.

Patent protection – does it cover AI?

Computer programs can be patented under certain conditions. Programs as such were, and still are, excluded from patent protection; however, the technical effect obtained through them is no longer excluded. It is therefore possible to obtain a patent for computer-aided inventions, for example “software that produces a technical effect.” How long can patent protection last? In principle, up to 20 years!

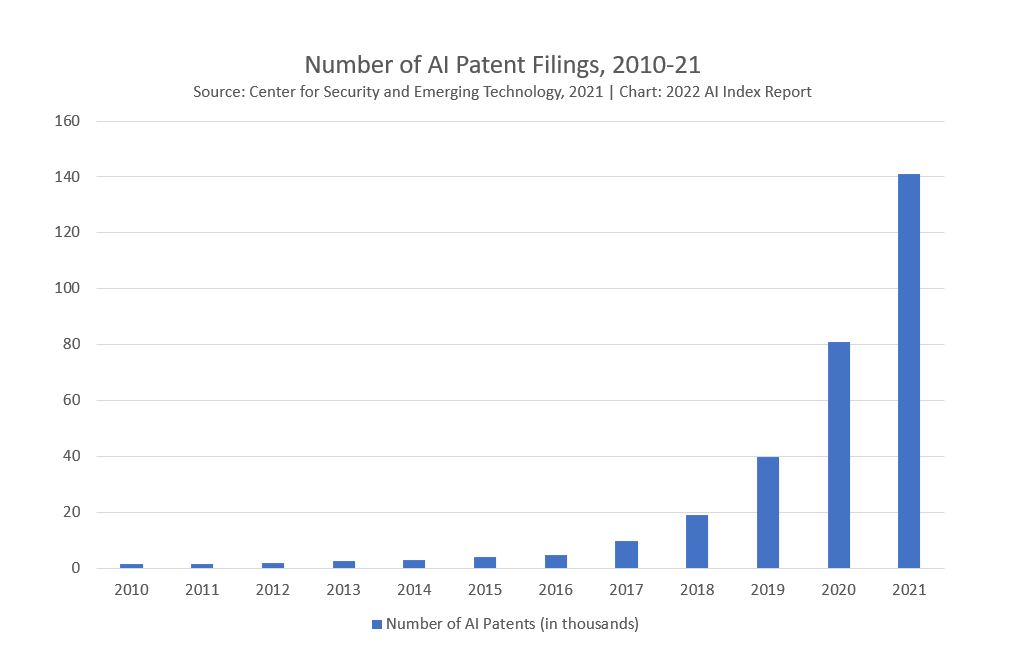

Since 2016/2017, the number of AI-related patent applications has grown dramatically on a global scale (thanks both to technological innovation and to changes in the law). According to official reports, by 2021 the number of such applications had already exceeded 140,000 worldwide. Both the patentability of these inventions and the number of applications open up numerous new opportunities, as well as the concerns and risks already mentioned.

D. Zhang, N. Maslej, E. Brynjolfsson, J. Etchemendy, T. Lyons, J. Manyika, H. Ngo, J. C. Niebles, M. Sellitto, E. Sakhaee, Y. Shoham, J. Clark, R. Perrault, “The AI Index 2022 Annual Report,” AI Index Steering Committee, Stanford Institute for Human-Centered AI, Stanford University, March 2022.

How to gain patent protection – what to invest in

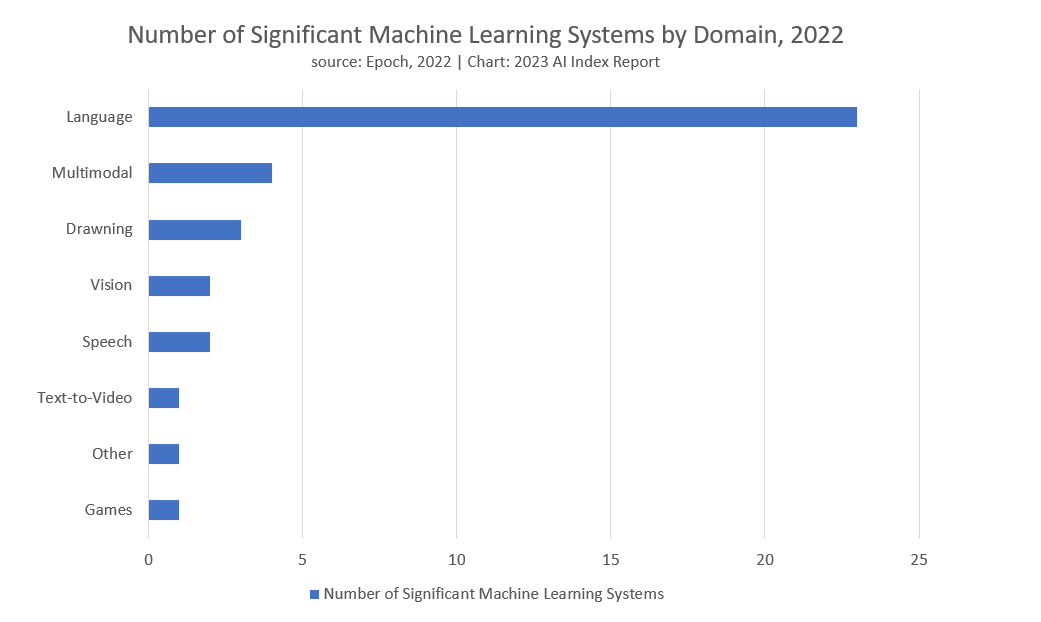

Currently, the fastest-growing branch of artificial intelligence is generative models, i.e. those that create new content based on probability. The potential of such models and their applications is enormous. The development of this technology was made possible by the transformer model and its attention mechanism (see “Attention Is All You Need,” December 5, 2017). The publication of the principle behind the algorithm gave rise to many models for natural language processing (NLP). Currently, OpenAI’s chatbot, ChatGPT, is the most widespread, although not the only one, as can be seen from the accompanying chart.

N. Maslej, L. Fattorini, E. Brynjolfsson, J. Etchemendy, K. Ligett, T. Lyons, J. Manyika, H. Ngo, J. C. Niebles, V. Parli, Y. Shoham, R. Wald, J. Clark, R. Perrault, “The AI Index 2023 Annual Report,” AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA, April 2023.

According to the latest guidelines on patenting computer programs, issued by the President of the Patent Office of the Republic of Poland (UPRP), a computer program must exhibit technical effects to be considered an invention and receive patent protection, but it cannot acquire a technical character merely by virtue of being executed automatically by a computer. “Further technical aspects” are required, i.e. aspects related to the technical considerations of the inner workings of the computer, and this cannot mean the algorithm itself that performs a specific task. A patent attorney familiar with the requirements for computer-assisted inventions is therefore of invaluable help in determining the scope of possible protection.